Most RAG implementations today are still limited to simple question-answering. The typical RAG chatbot requires humans to do most of the heavy lifting: reading through responses, synthesizing information, and producing final outputs like reports and analyses. But what if we could push our AI systems to do more?


What is Report Generation?

Report generation represents the next evolution in RAG-based systems. Instead of just answering questions, these systems can automatically produce complete documents - from research reports to presentations to analyses. They follow specific templates and style guidelines, incorporate properly formatted tables and diagrams, and make intelligent decisions about content organization. Most importantly, they can synthesize information from multiple sources into coherent narratives.

The impact of this capability is already being felt across industries. Investment firms are using report generation to create company analysis reports from earnings calls and SEC filings. Management consulting teams are synthesizing industry research into client-ready presentations. Technical teams are automating the creation of product documentation and API guides. Regulatory teams are generating RFP responses and compliance reports. Financial services firms are producing portfolio performance reports complete with charts and analysis.

This automation is transforming how knowledge work gets done. Organizations can reduce the time spent on routine document creation, ensure consistency across teams, and free up their experts to focus on high-value analysis and decision-making.

Report Generation Leads to Greater Time Savings

While enterprise search typically saves knowledge workers about 1-10 hours per month, report generation capabilities can save significantly more time. Based on common enterprise use cases like financial analysis reports, RFP responses, and technical documentation, we estimate report generation can save 10-15 hours per report by automating the initial drafting and formatting work. For teams producing dozens of reports monthly, this could add up to thousands of hours annually that teams can redirect to high-value analysis and strategic work.
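To make the arithmetic concrete, here is a back-of-the-envelope calculation. The 10-15 hours per report is the estimate above; the volume of 24 reports per month is an assumed figure chosen only to represent "dozens of reports monthly", and the linear scaling is a simplification.

```python
def annual_hours_saved(reports_per_month: int, hours_saved_per_report: float) -> float:
    """Hours freed per year, assuming savings scale linearly with report volume."""
    return reports_per_month * hours_saved_per_report * 12


# Assumed volume (illustrative only): two dozen reports per month.
REPORTS_PER_MONTH = 24

low = annual_hours_saved(REPORTS_PER_MONTH, 10)   # lower end of the 10-15 h estimate
high = annual_hours_saved(REPORTS_PER_MONTH, 15)  # upper end of the estimate
print(f"{low:,.0f} to {high:,.0f} hours per year")
```

Under these assumptions this prints `2,880 to 4,320 hours per year`, which is where "thousands of hours annually" comes from.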

Core Building Blocks for Report Generation

These five building blocks represent our current understanding based on what we've developed and seen work in production. But we're just scratching the surface - as teams experiment with report generation, we're discovering new patterns and components that push the boundaries of what's possible.

1. Structured Output Definition

The foundation of any report generation system is a clear definition of what the output should look like. This starts with creating Pydantic schemas that define the structure of your report, including different types of content blocks and their relationships. Here's an example schema for a multimodal report that mixes text and image blocks:

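```python
from typing import Any, Dict, List, Union

from pydantic import BaseModel


class TextBlock(BaseModel):
    """A block of generated prose (e.g. markdown)."""
    text: str


class ImageBlock(BaseModel):
    """An image such as a chart or diagram, referenced by path, with its caption."""
    file_path: str
    caption: str


class ReportOutput(BaseModel):
    """The full report: ordered content blocks plus a title and free-form metadata."""
    blocks: List[Union[TextBlock, ImageBlock]]
    title: str
    metadata: Dict[str, Any]
```

2. Advanced Document Processing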

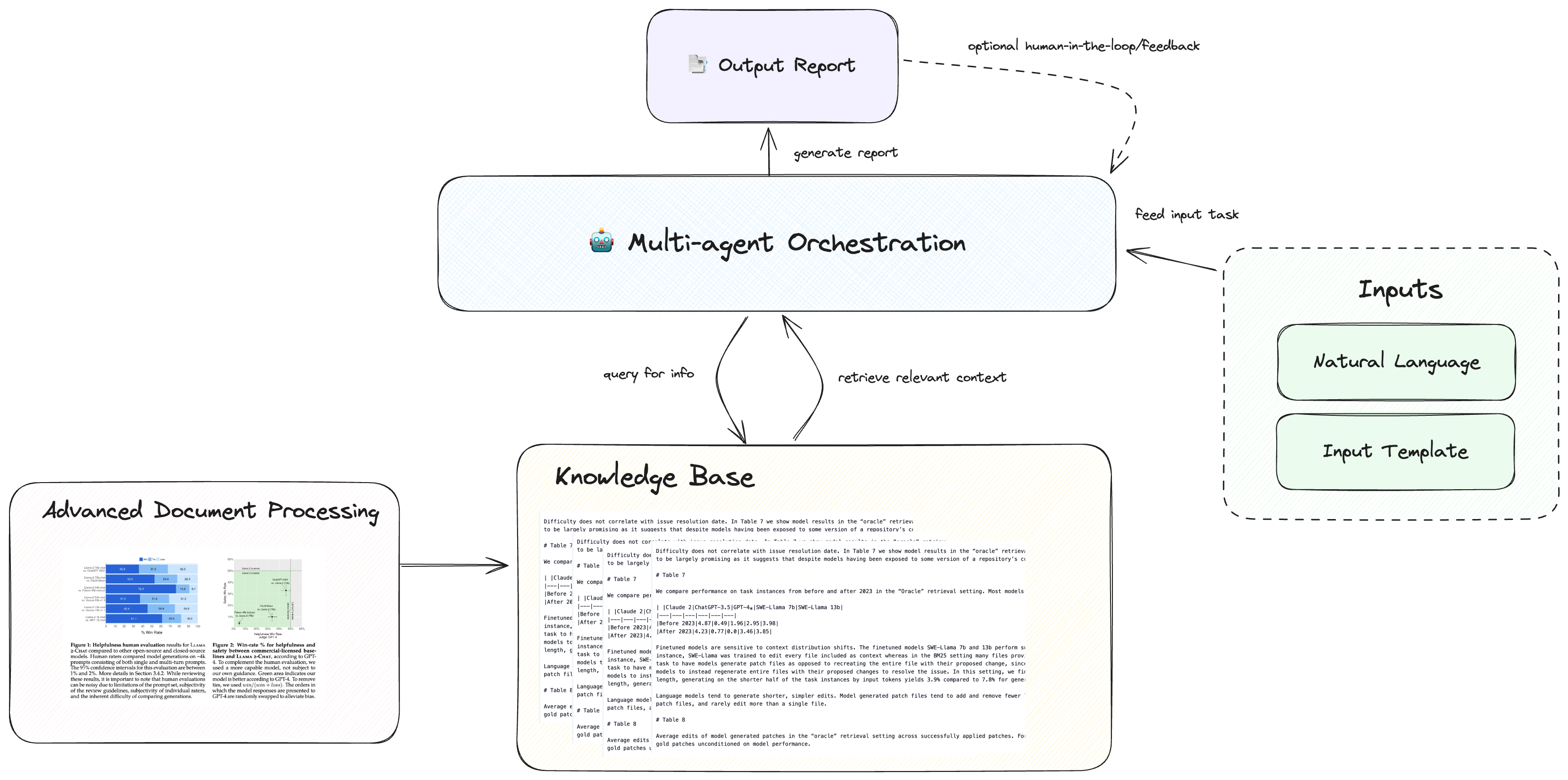

Report generation tasks often depend on unstructured documents, both in the input (e.g. a template document to fill in) and in the knowledge base. These documents, which include PDFs, PPTX, XLSX, DOCX, and more, often contain complex elements like tables, charts, and images.

Gen-AI native parsers like LlamaParse are well-suited for this task. They are specifically designed to extract information from even the most complicated documents in a form that LLMs can understand.

3. Knowledge Base Integration

The knowledge base is the engine that powers report generation. It needs to do more than just store and retrieve text - it must handle multimodal content, support various retrieval methods, and maintain metadata about information freshness and relevance. Your retrieval system should be able to understand document types, dates, and sources, providing efficient endpoints for different reporting needs.

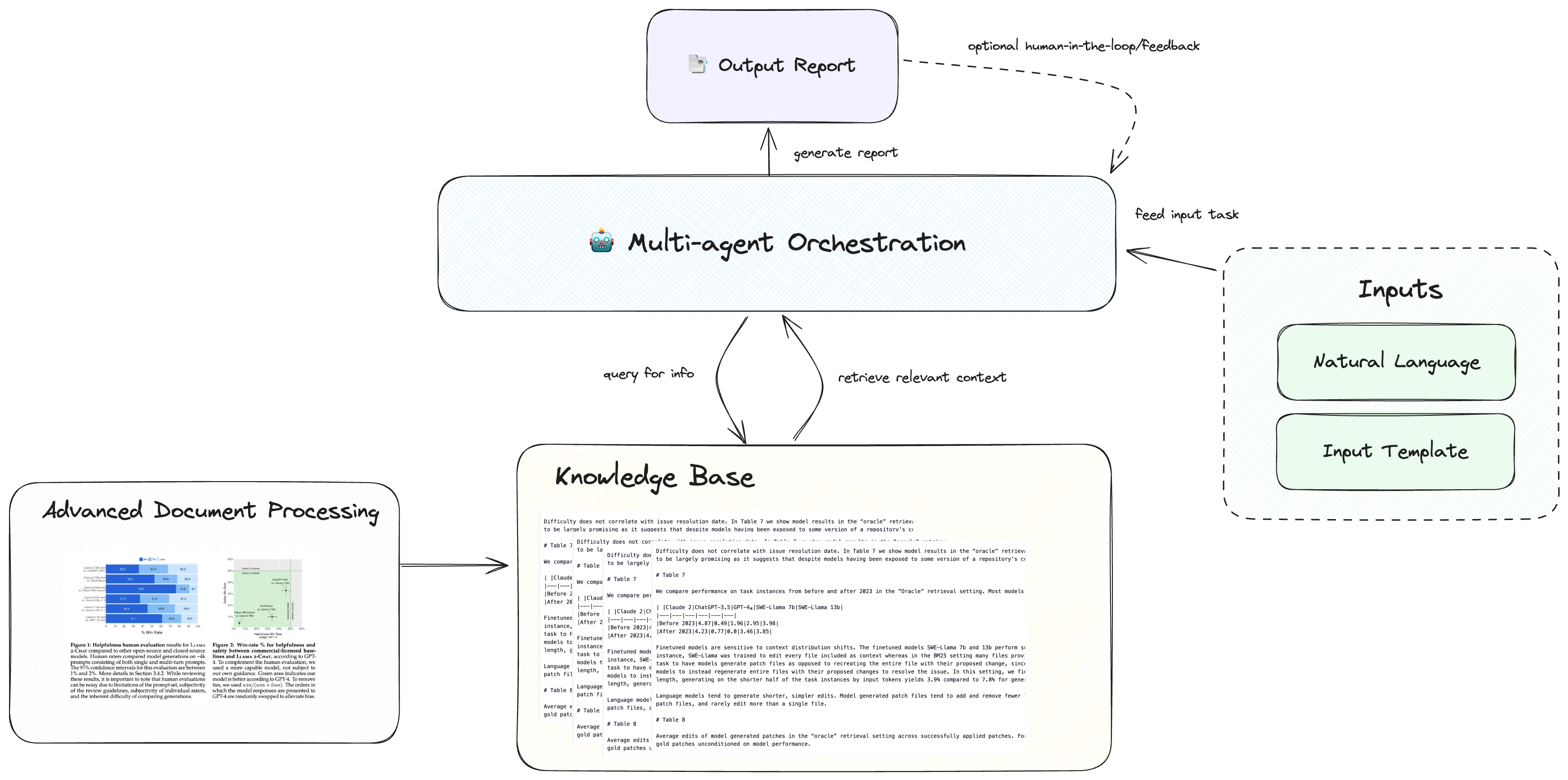
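As a minimal sketch of metadata-aware retrieval, the in-memory store below tags each chunk with its document type, source, and date, filters on those fields first, and then ranks the survivors. The `Chunk` and `KnowledgeBase` names are hypothetical, the sample chunks are made up, and naive keyword overlap stands in for the embedding or hybrid retrieval a production knowledge base would use.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class Chunk:
    text: str
    doc_type: str    # e.g. "10-K" or "earnings_call"
    source: str      # originating file or URL
    published: date  # used for freshness filtering and tie-breaking


class KnowledgeBase:
    """Hypothetical in-memory store; a real system would use a vector or hybrid index."""

    def __init__(self) -> None:
        self.chunks: List[Chunk] = []

    def add(self, chunk: Chunk) -> None:
        self.chunks.append(chunk)

    def retrieve(
        self,
        query: str,
        doc_type: Optional[str] = None,
        since: Optional[date] = None,
        top_k: int = 3,
    ) -> List[Chunk]:
        terms = set(query.lower().split())
        # Metadata filters run first, so off-type or stale chunks never compete.
        candidates = [
            c
            for c in self.chunks
            if (doc_type is None or c.doc_type == doc_type)
            and (since is None or c.published >= since)
        ]
        # Score by keyword overlap and discard chunks with no overlap at all.
        scored = [(len(terms & set(c.text.lower().split())), c) for c in candidates]
        scored = [(score, c) for score, c in scored if score > 0]
        # Higher score first; among equal scores, newer documents first.
        scored.sort(key=lambda pair: (pair[0], pair[1].published), reverse=True)
        return [c for _, c in scored[:top_k]]


kb = KnowledgeBase()
kb.add(Chunk("Revenue outlook discussed on the Q3 2024 call", "earnings_call", "q3_2024_call.pdf", date(2024, 10, 30)))
kb.add(Chunk("Revenue outlook discussed on the Q3 2023 call", "earnings_call", "q3_2023_call.pdf", date(2023, 10, 30)))
kb.add(Chunk("Risk factors from the annual filing", "10-K", "10k_2024.pdf", date(2024, 2, 15)))

recent = kb.retrieve("revenue outlook", doc_type="earnings_call", since=date(2024, 1, 1))
print([c.source for c in recent])
```

Filtering before ranking is the design choice worth noting: a report section that asks for "the latest earnings call" should never be answered from an older call just because its wording happens to match the query better.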

4. Multi-Agent Workflow Architecture

Rather than relying on a single LLM to generate the entire report, breaking the task into specialized agent roles produces better results. A typical workflow involves a researcher agent that retrieves and evaluates information, a writer agent that generates properly formatted content, and an editor agent that reviews and refines the output. This division of labor mirrors human writing teams and leads to higher quality outputs.
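The division of labor can be sketched without any LLM calls. Below, the three roles are plain Python functions standing in for model-backed agents; all names, the word limit, and the sample notes are hypothetical. The researcher pulls notes, the writer drafts from them, and the editor either approves the draft or returns feedback that triggers a bounded revision loop. In a real system each function would wrap an LLM prompt, and an orchestrator such as LlamaIndex Workflows would coordinate them.

```python
from typing import Dict, List, Optional

MAX_WORDS = 12  # hypothetical style-guide limit on words per section


def researcher(section: str, corpus: Dict[str, List[str]]) -> List[str]:
    """Stand-in for a retrieval agent: return the notes filed under a section."""
    return corpus.get(section, [])


def writer(section: str, notes: List[str], feedback: Optional[str] = None) -> str:
    """Stand-in for a drafting agent: turn notes into section text."""
    # On a "shorten" request, keep only the first (assumed most important) note.
    used = notes[:1] if feedback == "shorten" else notes
    body = " ".join(used) if used else "[no sources found]"
    return f"{section}: {body}"


def editor(draft: str) -> Optional[str]:
    """Stand-in for a review agent: return feedback, or None to approve."""
    return "shorten" if len(draft.split()) > MAX_WORDS else None


def write_section(section: str, corpus: Dict[str, List[str]], max_rounds: int = 2) -> str:
    """Research once, then alternate writer and editor for a bounded number of rounds."""
    notes = researcher(section, corpus)
    draft = writer(section, notes)
    for _ in range(max_rounds):
        feedback = editor(draft)
        if feedback is None:  # the editor approved the draft
            break
        draft = writer(section, notes, feedback)
    return draft


corpus = {
    "Overview": [
        "The company operates in three segments.",
        "Segment details are in the appendix of the annual filing.",
    ],
}
print(write_section("Overview", corpus))
```

Here the first draft exceeds the word limit, the editor asks for a shorter version, and the revised draft (`Overview: The company operates in three segments.`) is approved. Capping the loop with `max_rounds` keeps a disagreeing writer and editor from cycling indefinitely.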

5. Template Processing System

Many real-world reports follow existing templates or formats. Your system needs to parse these templates into executable plans, extract style guidelines, and map sections to required information types. This ensures that generated reports match existing organizational standards and practices.
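As an illustration of turning a template into an executable plan, the sketch below assumes a hypothetical template convention: `## ` headings mark sections and `{{placeholder}}` tokens mark the information each section needs. It produces one plan step per section, listing the fields the researcher must supply. Real templates in DOCX, PPTX, or XLSX would first need to be parsed (for example with LlamaParse) before a step like this applies.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical conventions: "## " starts a section, {{name}} marks a required field.
SECTION = re.compile(r"^##[ \t]+(.+?)[ \t]*$", re.MULTILINE)
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


@dataclass
class PlanStep:
    section: str                # section title, in template order
    required_fields: List[str]  # information the researcher must supply
    template_text: str          # section body, kept so the writer can mirror its wording


def parse_template(template: str) -> List[PlanStep]:
    """Turn a template into an ordered plan with one step per section."""
    # With a capture group, split returns [preamble, title1, body1, title2, body2, ...].
    parts = SECTION.split(template)
    plan = []
    for title, body in zip(parts[1::2], parts[2::2]):
        body = body.strip()
        # dict.fromkeys drops repeated placeholders while keeping first-seen order.
        fields = list(dict.fromkeys(PLACEHOLDER.findall(body)))
        plan.append(PlanStep(title, fields, body))
    return plan


TEMPLATE = """# Quarterly Report

## Summary
Revenue was {{revenue}} compared with {{prior_revenue}}.

## Risks
Key risks: {{risks}}. Revisit {{ revenue }} assumptions.
"""

for step in parse_template(TEMPLATE):
    print(step.section, step.required_fields)
```

Keeping each section's original wording in `template_text` gives the writer agent a style reference alongside the list of facts it needs.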

Putting It All Together

These building blocks work together in a pipeline. When a report generation request comes in, the template processor analyzes the required format. The researcher agent then queries the knowledge base and builds an information cache. Next, the writer agent generates content following the structured output definition, and the editor agent reviews and refines the output before final delivery.

This architecture offers significant advantages in terms of automation and consistency, though it comes with important considerations around quality control and the need for human review of critical documents.
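The pipeline described above can be sketched end to end with stub stages. Everything here is hypothetical: `process_template` stands in for the template processor, a dictionary-backed lookup stands in for the knowledge base, and the writer and editor are trivial string functions. Two ideas are illustrated. First, the information cache: the researcher resolves each required field once, so sections that share a field (here, `revenue`) reuse the cached result. Second, human review: the editor flags sections with missing information instead of letting them pass silently.

```python
from typing import Callable, Dict, List, Tuple

Plan = List[Tuple[str, List[str]]]  # (section title, required fields)


def process_template() -> Plan:
    """Stand-in for the template processor: the plan it would derive from a template."""
    return [("Summary", ["revenue", "margin"]), ("Outlook", ["revenue", "guidance"])]


def research(plan: Plan, lookup: Callable[[str], str]) -> Dict[str, str]:
    """Researcher: query the knowledge base once per distinct field and cache the result."""
    cache: Dict[str, str] = {}
    for _, fields in plan:
        for name in fields:
            if name not in cache:
                cache[name] = lookup(name)
    return cache


def write(plan: Plan, cache: Dict[str, str]) -> List[Tuple[str, str]]:
    """Writer: draft each section from cached facts only, marking gaps explicitly."""
    drafts = []
    for title, fields in plan:
        facts = [cache[name] or f"[missing: {name}]" for name in fields]
        drafts.append((title, "; ".join(facts)))
    return drafts


def edit(drafts: List[Tuple[str, str]]) -> Tuple[str, List[str]]:
    """Editor: assemble the document and flag sections with gaps for human review."""
    document = "\n".join(f"{title}: {text}" for title, text in drafts)
    flagged = [title for title, text in drafts if "[missing:" in text]
    return document, flagged


def generate_report(lookup: Callable[[str], str]) -> Tuple[str, List[str]]:
    plan = process_template()
    cache = research(plan, lookup)
    return edit(write(plan, cache))


# Sample knowledge base (made up) with no entry for "guidance".
FACTS = {"revenue": "revenue notes", "margin": "margin notes"}
calls: List[str] = []


def kb_lookup(name: str) -> str:
    calls.append(name)  # record every knowledge-base query
    return FACTS.get(name, "")


report, needs_review = generate_report(kb_lookup)
print(report)
print("Needs review:", needs_review)
print("Lookups:", calls)
```

Four field references resolve with only three knowledge-base queries, and the `Outlook` section is flagged because its `guidance` field came back empty.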

Getting Started

At LlamaIndex, we're committed to helping developers evolve from basic RAG applications to sophisticated knowledge assistants capable of report generation. This transition represents the next frontier in AI-assisted knowledge work, and we've built core components to make it possible:

- LlamaCloud is our enterprise RAG platform that helps users ETL their unstructured data into a format optimized for report generation. It handles multimodal content processing and indexing while maintaining document structure - critical for accurate report generation.

- LlamaParse provides advanced document parsing for complex documents with tables, diagrams, and intricate layouts. It ensures your report generation system has high-quality, well-structured data to work with.

- LlamaIndex Workflows offers event-driven agent workflow orchestration for coordinating the multiple specialized agents needed in report generation.

These components work together to solve the key challenges in report generation: processing complex documents, maintaining high-quality knowledge bases, and orchestrating multi-agent workflows. To help you get started, we've created a set of notebooks demonstrating the techniques:

- Multimodal report generation

- Financial report analysis

- Excel template filling

- RFP response generation

Each notebook provides a complete, working example that you can adapt for your specific needs. Our goal is to make these advanced capabilities accessible to every developer, backed by our production-ready framework and community support.

The Future of Knowledge Work

Moving beyond basic RAG to full report generation represents a significant step forward in AI-assisted knowledge work. While the architecture is more complex, the potential for automation and efficiency gains makes it a worthwhile investment. By thoughtfully implementing these building blocks, you can create systems that not only answer questions but actually produce the kinds of outputs that knowledge workers spend hours creating manually. This gets us closer to the vision of AI systems that can truly augment and enhance human cognitive work.

To get started with LlamaIndex, join our Discord community or explore our documentation. Get started with LlamaIndex Workflows here.

To get started with LlamaParse/LlamaCloud, sign up here. If you’re interested in production-level knowledge management within the enterprise, come talk to us.