This is a guest post from our friends at Mozilla about llamafile.

llamafile, an open source project from Mozilla, is one of the simplest ways to run a large language model (LLM) on your laptop. All you have to do is download a llamafile from HuggingFace then run the file. That's it. On most computers, you won't need to install anything.

There are a few reasons why you might want to run an LLM on your laptop, including:

1. Privacy: Running locally means you won't have to share your data with third parties.

2. High availability: Run your LLM-based app without an internet connection.

3. Bring your own model: You can easily test many different open-source LLMs (anything available on HuggingFace) and see which one works best for your task.

4. Free debugging/testing: Local LLMs allow you to test many parts of an LLM-based system without paying for API calls.

In this blog post, we'll show how to set up a llamafile and use it to run a local LLM on your computer. Then, we'll show how to use LlamaIndex with your llamafile as the LLM & embedding backend for a local RAG-based research assistant. You won't have to sign up for any cloud service or send your data to any third party--everything will just run on your laptop.

Note: You can also get all of the example code below as a Jupyter notebook from our GitHub repo.

Download and run a llamafile

First, what is a llamafile? A llamafile is an executable LLM that you can run on your own computer. It contains the weights for a given open source LLM, as well as everything needed to actually run that model on your computer. There's nothing to install or configure (with a few caveats, discussed here).

Each llamafile bundles two things: 1) model weights and metadata in GGUF format, and 2) a copy of `llama.cpp` specially compiled using [Cosmopolitan Libc](https://github.com/jart/cosmopolitan). This allows the models to run on most computers without additional installation. llamafiles also come with a ChatGPT-like browser interface, a CLI, and an OpenAI-compatible REST API for chat models.
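Because the REST API is OpenAI-compatible, a llamafile running in server mode can be queried with plain HTTP. Here is a minimal sketch using only the Python standard library. It assumes the server is listening on `http://localhost:8080` (the address used later in this post) and exposes the usual OpenAI-style `/v1/chat/completions` route. The helper names `build_chat_request` and `ask` and the `"local-llamafile"` model name are placeholders we made up for illustration, not part of llamafile.

```python
import json
import urllib.request

# Assumption: a llamafile is already running in server mode at this address.
BASE_URL = "http://localhost:8080"


def build_chat_request(question, base_url=BASE_URL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        # Placeholder model name (hypothetical); the server hosts a single model.
        "model": "local-llamafile",
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": question},
        ],
        "temperature": 0,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question, base_url=BASE_URL, timeout=120):
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(question, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # OpenAI-style responses put the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

Once a server is up, `print(ask("What is a homing pigeon?"))` should print the model's answer. Nothing is sent until `ask` is called, so the module is safe to import before the server starts.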

There are only 2 steps to setting up a llamafile:

1. Download a llamafile

2. Make the llamafile executable

We'll go through each step in detail below.

Step 1: Download a llamafile

There are many llamafiles available on the HuggingFace model hub (just search for 'llamafile') but for the purpose of this walkthrough, we'll use TinyLlama-1.1B (0.67 GB, model info). To download the model, you can either click this download link: TinyLlama-1.1B or open a terminal and use something like `wget`. The download should take 5-10 minutes depending on the quality of your internet connection.

text

wget https://huggingface.co/Mozilla/TinyLlama-1.1B-Chat-v1.0-llamafile/resolve/main/TinyLlama-1.1B-Chat-v1.0.F16.llamafile

This model is small and won't be very good at actually answering questions. But since it's a relatively quick download and its inference speed will allow you to index your vector store in just a few minutes, it's good enough for the examples below. For a higher-quality LLM, you may want to use a larger model like Mistral-7B-Instruct (5.15 GB, model info).

Note: The commands in the rest of this post refer to the file as `TinyLlama-1.1B-Chat-v1.0.Q5_K_M.llamafile`. If the file you downloaded has a different name (the URL above ends in `F16.llamafile`), substitute that name in each command.

Step 2: Make the llamafile executable

If you didn't download the llamafile from the command line, figure out where your browser stored your downloaded llamafile.

Now, open your computer's terminal and, if necessary, go to the directory where your llamafile is stored: `cd path/to/downloaded/llamafile`

If you're using macOS, Linux, or BSD, you'll need to grant permission for your computer to execute this new file. (You only need to do this once.)

text

chmod +x TinyLlama-1.1B-Chat-v1.0.Q5_K_M.llamafile

If you're on Windows, instead just rename the file by adding ".exe" to the end, e.g. rename `TinyLlama-1.1B-Chat-v1.0.Q5_K_M.llamafile` to `TinyLlama-1.1B-Chat-v1.0.Q5_K_M.llamafile.exe`.

Kick the tires

Now, your llamafile should be ready to go. First, you can check which version of the llamafile library was used to build the llamafile binary you just downloaded:

text

./TinyLlama-1.1B-Chat-v1.0.Q5_K_M.llamafile --version

llamafile v0.7.0

This post was written using a model built with `llamafile v0.7.0`. If your llamafile displays a different version and some of the steps below don't work as expected, please post an issue on the llamafile issue tracker.

The easiest way to use your llamafile is via its built-in chat interface. In a terminal, run

sh

./TinyLlama-1.1B-Chat-v1.0.Q5_K_M.llamafile

Your browser should open automatically and display a chat interface. (If it doesn't, just open your browser and point it at http://localhost:8080.) When you're done chatting, return to your terminal and hit `Control-C` to shut down llamafile. If you're running these commands inside a notebook, just interrupt the notebook kernel to stop the llamafile.
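If the browser tab doesn't load, it can help to check whether anything is listening on port 8080 at all. The sketch below is one way to do that from Python using only the standard library. The function name `server_is_listening` is our own, and the check only confirms that some process accepts TCP connections on that port, not that the process is a llamafile.

```python
import socket


def server_is_listening(host="localhost", port=8080, timeout=1.0):
    """Return True if something accepts TCP connections on host:port."""
    try:
        # create_connection raises OSError if the connection is refused
        # or times out.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    print("Something is listening on localhost:8080:", server_is_listening())
```

If this prints `False` while your llamafile is supposedly running, check the terminal where you started it for error messages.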

In the rest of this walkthrough, we'll be using the llamafile's built-in inference server instead of the browser interface. The llamafile's server provides a REST API for interacting with the TinyLlama LLM via HTTP. Full server API documentation is available here. To start the llamafile in server mode, run:

sh

./TinyLlama-1.1B-Chat-v1.0.Q5_K_M.llamafile --server --nobrowser --embedding

Summary: Download and run a llamafile

sh

# 1. Download the llamafile-ized model

wget https://huggingface.co/Mozilla/TinyLlama-1.1B-Chat-v1.0-llamafile/resolve/main/TinyLlama-1.1B-Chat-v1.0.F16.llamafile

# 2. Make it executable (you only need to do this once).
#    If your downloaded file has a different name, use that name here and in step 3.

chmod +x TinyLlama-1.1B-Chat-v1.0.Q5_K_M.llamafile

# 3. Run in server mode

./TinyLlama-1.1B-Chat-v1.0.Q5_K_M.llamafile --server --nobrowser --embedding

Build a research assistant using LlamaIndex and llamafile

Now, we'll show how to use LlamaIndex with your llamafile to build a research assistant that helps you learn about a topic of interest. For this post, we chose homing pigeons. We'll show how to prepare your data, index it in a vector store, and then query it.
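To make "index it in a vector store, then query it" a bit more concrete, here is a toy sketch of the retrieval idea in plain Python. Everything in it is made up for illustration: the three-dimensional vectors stand in for real embeddings (which would come from the embedding model), and `toy_index` and `retrieve` are hypothetical names. LlamaIndex handles all of this for you, with more machinery than shown here.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up 3-dimensional "embeddings"; real ones have far more dimensions.
toy_index = [
    ("Pigeons carried messages in wartime.", [0.9, 0.1, 0.0]),
    ("Magnetoreception helps birds navigate.", [0.1, 0.9, 0.1]),
    ("Bread needs flour, water, and yeast.", [0.0, 0.1, 0.9]),
]


def retrieve(query_vector, index, top_k=2):
    """Return the top_k texts whose vectors are most similar to the query."""
    ranked = sorted(
        index,
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:top_k]]


# A made-up query vector that points mostly in the "pigeon" direction.
query_vector = [0.8, 0.3, 0.0]
print(retrieve(query_vector, toy_index))
```

In a RAG system, the retrieved texts are then pasted into the LLM's prompt alongside the user's question, which is why the size of each indexed chunk matters.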

One of the nice things about running an LLM locally is privacy. You can mix both "public data" like Wikipedia pages and "private data" without worrying about sharing your data with a third party. Private data could include e.g. your private notes on a topic or PDFs of classified content. As long as you use a local LLM (and a local vector store), you won't have to worry about leaking data. Below, we'll show how to combine both types of data. Our vector store will include Wikipedia pages, an Army manual on caring for homing pigeons, and some brief notes we took while we were reading about this topic.

To get started, download our example data:

sh

mkdir data

# Download 'The Homing Pigeon' manual from Project Gutenberg

wget https://www.gutenberg.org/cache/epub/55084/pg55084.txt -O data/The_Homing_Pigeon.txt

# Download some notes on homing pigeons

wget https://gist.githubusercontent.com/k8si/edf5a7ca2cc3bef7dd3d3e2ca42812de/raw/24955ee9df819e21975b1dd817938c1bfe955634/homing_pigeon_notes.md -O data/homing_pigeon_notes.md

Next, we'll need to install LlamaIndex and a few of its integrations:

sh

# Install llama-index

pip install llama-index-core

# Install llamafile integrations and SimpleWebPageReader

pip install llama-index-embeddings-llamafile llama-index-llms-llamafile llama-index-readers-web

Start your llamafile server and configure LlamaIndex

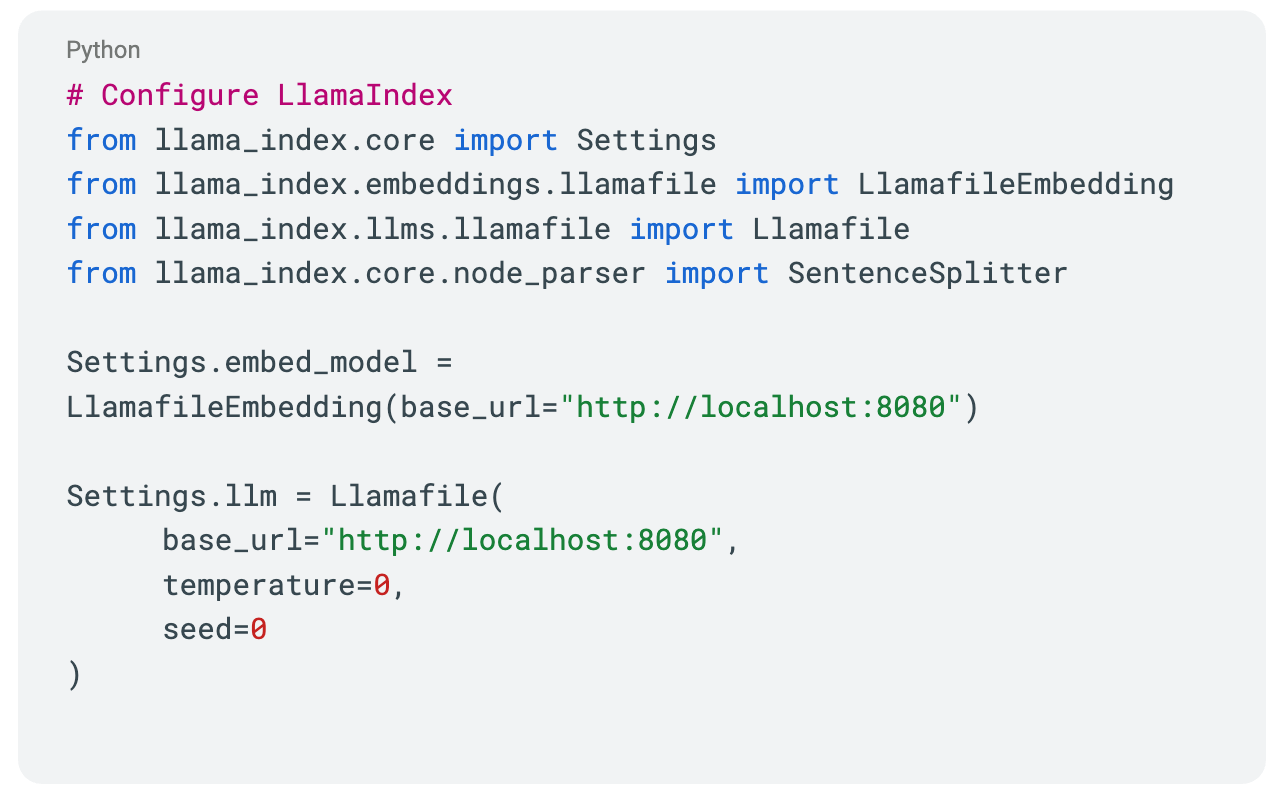

In this example, we'll use the same llamafile both to produce the embeddings that get indexed in our vector store and as the LLM that answers queries later on. (However, there is no reason you can't use one llamafile for the embeddings and a separate llamafile for the LLM functionality. You would just need to start the llamafile servers on different ports.)
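For reference, the two-server variant might look roughly like the sketch below. It assumes you have started a second llamafile with `--port 8081` (the port number is an arbitrary choice of ours) and that the LlamaIndex packages installed above are available. The rest of this post sticks with a single server on port 8080.

```python
# Sketch of a two-server setup (assumption: embeddings served on port 8080,
# a second llamafile serving the LLM on port 8081).
from llama_index.core import Settings
from llama_index.embeddings.llamafile import LlamafileEmbedding
from llama_index.llms.llamafile import Llamafile

# Embeddings come from the first llamafile server.
Settings.embed_model = LlamafileEmbedding(base_url="http://localhost:8080")

# Text generation comes from the second llamafile server.
Settings.llm = Llamafile(
    base_url="http://localhost:8081",
    temperature=0,
    seed=0,
)
```

Splitting the roles this way would let you pair a small, fast model for embeddings with a larger model for answering questions.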

To start the llamafile server, open a terminal and run:

sh

./TinyLlama-1.1B-Chat-v1.0.Q5_K_M.llamafile --server --nobrowser --embedding --port 8080

Now, we'll configure LlamaIndex to use this llamafile:

python

# Configure LlamaIndex

from llama_index.core import Settings

from llama_index.embeddings.llamafile import LlamafileEmbedding

from llama_index.llms.llamafile import Llamafile

from llama_index.core.node_parser import SentenceSplitter

Settings.embed_model = LlamafileEmbedding(base_url="http://localhost:8080")

Settings.llm = Llamafile(
    base_url="http://localhost:8080",
    temperature=0,
    seed=0
)

# Also set up a sentence splitter to ensure texts are broken into semantically-meaningful chunks (sentences) that don't take up the model's entire

# context window (2048 tokens). Since these chunks will be added to LLM prompts as part of the RAG process, we want to leave plenty of space for both

# the system prompt and the user's actual question.

Settings.transformations = [
    SentenceSplitter(
        chunk_size=256,
        chunk_overlap=5
    )
]

Prepare your data and build a vector store

Now, we'll load our data and index it.

python

# Load local data

from llama_index.core import SimpleDirectoryReader

local_doc_reader = SimpleDirectoryReader(input_dir='./data')

docs = local_doc_reader.load_data(show_progress=True)

# We'll load some Wikipedia pages as well

from llama_index.readers.web import SimpleWebPageReader

urls = [
    'https://en.wikipedia.org/wiki/Homing_pigeon',
    'https://en.wikipedia.org/wiki/Magnetoreception',
]

web_reader = SimpleWebPageReader(html_to_text=True)

docs.extend(web_reader.load_data(urls))

# Build the index

from llama_index.core import VectorStoreIndex

index = VectorStoreIndex.from_documents(
    docs,
    show_progress=True,
)

# Save the index

index.storage_context.persist(persist_dir="./storage")

Query your research assistant

Finally, we're ready to ask some questions about homing pigeons.

python

query_engine = index.as_query_engine()

print(query_engine.query("What were homing pigeons used for?"))

text

Homing pigeons were used for a variety of purposes, including military reconnaissance, communication, and transportation. They were also used for scientific research, such as studying the behavior of birds in flight and their migration patterns. In addition, they were used for religious ceremonies and as a symbol of devotion and loyalty. Overall, homing pigeons played an important role in the history of aviation and were a symbol of the human desire for communication and connection.

python

print(query_engine.query("When were homing pigeons first used?"))

text

The context information provided in the given context is that homing pigeons were first used in the 19th century. However, prior knowledge would suggest that homing pigeons have been used for navigation and communication for centuries.

Conclusion

In this post, we've shown how to download and set up an LLM running locally via llamafile. Then, we showed how to use this LLM with LlamaIndex to build a simple RAG-based research assistant for learning about homing pigeons. Your assistant ran 100% locally: you didn't have to pay for API calls or send data to a third party.

As a next step, you could try running the examples above with a better model like Mistral-7B-Instruct. You could also try building a research assistant for a different topic like "semiconductors" or "how to bake bread".

To find out more about llamafile, check out the project on GitHub, read this blog post on bash one-liners using LLMs, or say hi to the community on Discord.