RAG is only as Good as your Data

Building production-ready LLM applications is hard. We've been chatting with hundreds of users, ranging from Fortune 500 enterprises to pre-seed startups and here's what they tell us they struggle with:

- Data Quality Issues: Most companies deal with large sets of complex, heterogeneous documents. Think PDFs with messy formatting, images, tables across multiple pages, different languages - the list goes on. Ensuring high-quality data input is crucial. "Garbage in, garbage out" holds especially true for LLM applications.

- Scalability Hurdles: Each new data source requires significant engineering hours for custom parsing and tuning. Keeping data sources in sync isn't easy either.

- Accuracy Concerns: Bad retrievals and hallucinations are common problems when LLMs interact with enterprise data, leading to unreliable outputs.

- Configuration Overload: Fine-tuning LLM applications involves numerous parameters and often requires deep technical expertise, making iterative improvement a daunting task.

As developers shift from prototypes towards building production applications - complex orchestration is needed and they want to centralize their abstractions for managing their data. They want a unified interface for processing and retrieving over their diverse sources of data.

To address these difficulties, we soft-launched LlamaCloud and made LlamaParse widely available a few months ago to bring production-grade context-augmentation to your LLM and RAG applications. LlamaParse can already support 50+ languages and 100+ document formats. The adoption has been incredible - we have grown to tens of thousands of active users for LlamaParse who have processed tens of million pages! Here’s an example from Dean Barr, Applied AI Lead at Carlyle:

As an AI Applied Data Scientist who was granted one of the first ML patents in the U.S., and who is building cutting-edge AI capabilities at one of the world's largest Private Equity Funds, I can confidently say that LlamaParse from LlamaIndex is currently the best technology I have seen for parsing complex document structures for Enterprise RAG pipelines. Its ability to preserve nested tables, extract challenging spatial layouts, and images is key to maintaining data integrity in advanced RAG and agentic model building.

The Rise of Centralized Knowledge Management

We have designed LlamaCloud to cater to the need of production-grade context-augmentation for your LLM and RAG applications. Let's take a tour of what LlamaCloud brings to the table:

- LlamaParse: Our state-of-the-art parser that turns complex documents with tables and charts into LLM-friendly formats. You can learn more about LlamaParse here.

- Managed Ingestion: Connect to enterprise data sources and your choice of data sinks with ease. We support multiple data sources and are adding more. LlamaCloud provides default parsing configurations for generating vector embeddings, while also allowing deep customization for specific applications.

- Advanced Retrieval: LlamaCloud allows basic semantic search retrieval as well as advanced techniques like hybrid search, reranking, and metadata filtering to improve the accuracy of the retrieval. This provides the necessary configurability to build end to end RAG over complex documents.

- LlamaCloud Playground: An interactive UI to test and refine your ingestion and retrieval strategies before deployment.

- Scalability and Security: Handle large volumes of production data. Compliance certifications as well as deployment options are available based on your security needs.

This video gives a detailed walk through of LlamaCloud:

Our customers tell us that LlamaCloud enables developers to spend less time setting up and iterating on their data pipelines for LLM use cases, allowing them to iterate through the LLM application development lifecycle much more quickly. Here’s what Teemu Lahdenpera, CTO at Scaleport.ai had to say:

LlamaCloud has really sped up our development timelines. Getting to technical prototypes quickly allows us to show tangible value instantly, improving our sales outcomes. When needed, switching from the LlamaCloud UI to code has been really seamless. The configurable parsing and retrieval features have significantly improved our response accuracy.

We've also seen great results with LlamaParse and found it outperforming GPT-4 vision on some OCR tasks!

Try it yourself

We’ve opened up an official waitlist for LlamaCloud. Here's how you can get involved:

- Join the LlamaCloud Waitlist: Sign up here.

- Get in Touch: Have questions? Want to discuss unlimited commercial use? Contact us and let's chat! Note: we support private deployments for a select number of enterprises

- Stay Updated: Follow us on Twitter and join our Discord community to stay in the loop.

In the meantime, anyone can create an account at https://cloud.llamaindex.ai/. While you’re waiting for official LlamaCloud access, anyone can immediately start using our LlamaParse APIs.

We’re shipping a lot of features in the next few weeks. We look forward to seeing the context-augmented LLM applications that you can build on top of LlamaCloud! 🚀🦙

FAQ

Have you got some examples of how to use LlamaCloud?

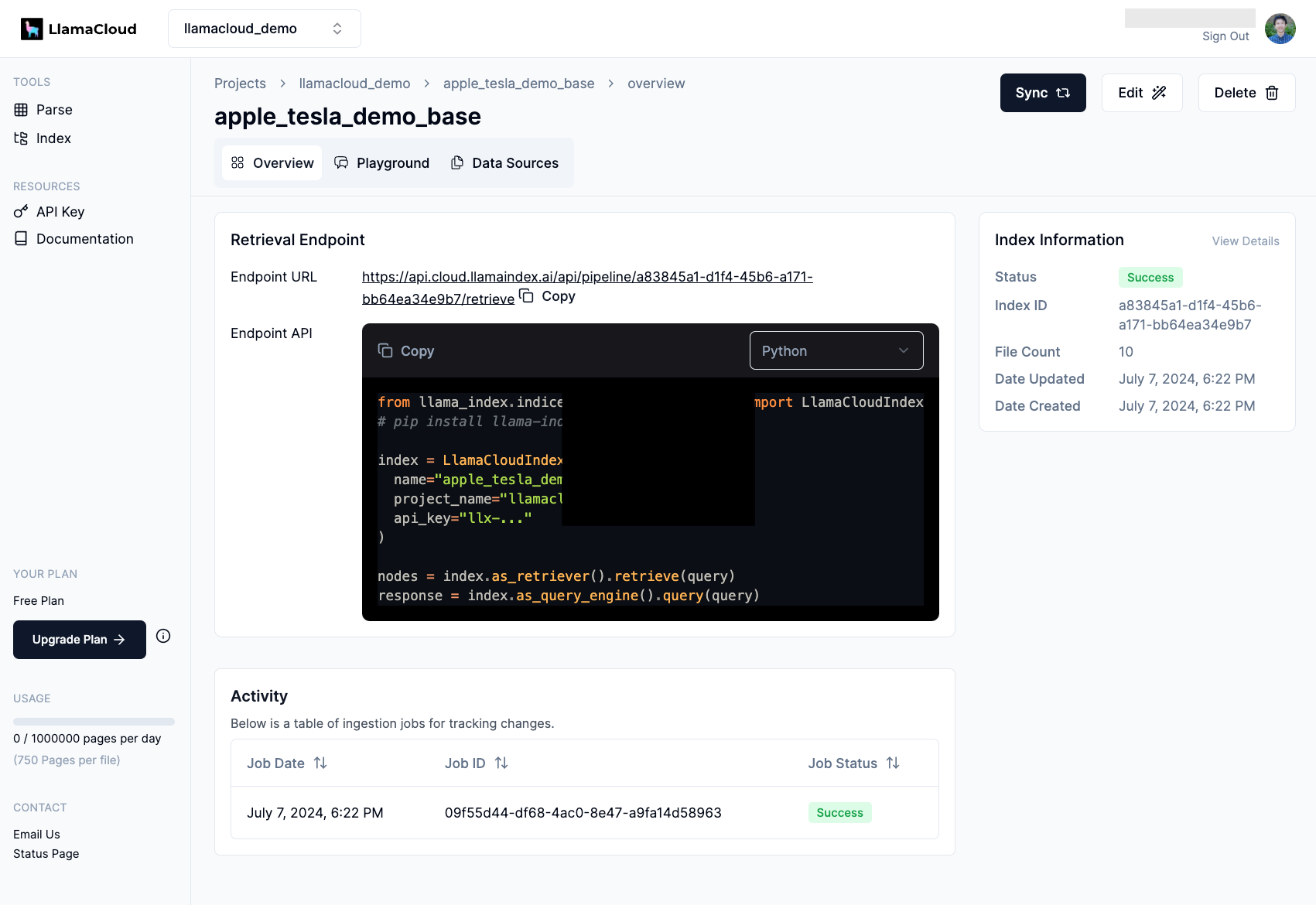

We sure do! One of the strengths of LlamaCloud is how easily the endpoints integrate into your existing code. Our llamacloud-demo repo has lots of examples from getting started to running evaluations.

Is this competitive with vector databases?

No. LlamaCloud is focused primarily on data parsing and ingestion, which is a complementary layer to any vector storage provider. The retrieval layer is orchestration on top of an existing storage system. LlamaIndex open-source integrates with 40+ of the most popular vector databases, and we are integrating LlamaCloud with storage providers based on customer requests.