An efficient, responsive AI sales assistant can greatly enhance internal operations, particularly for sales teams who need accurate information at their fingertips. In this case study, we explore the journey of building an AI sales assistant for NVIDIA's sales representatives using LlamaIndex and NVIDIA NIM (NVIDIA Inference Microservices). This solution provides access to internal data, so the team gets the information they need when they need it.

The Challenge

Sales teams face the challenge of quickly accessing specific information related to internal resources, products, and documentation. They need an AI solution that can answer complex internal queries accurately and efficiently, but also go beyond retrieval-based answers to assist with tasks like translation or drafting emails. NVIDIA began a project to create a robust, interactive AI interface that would improve a sales team's productivity while being easy to use.

The Solution: LlamaIndex + NVIDIA NIM

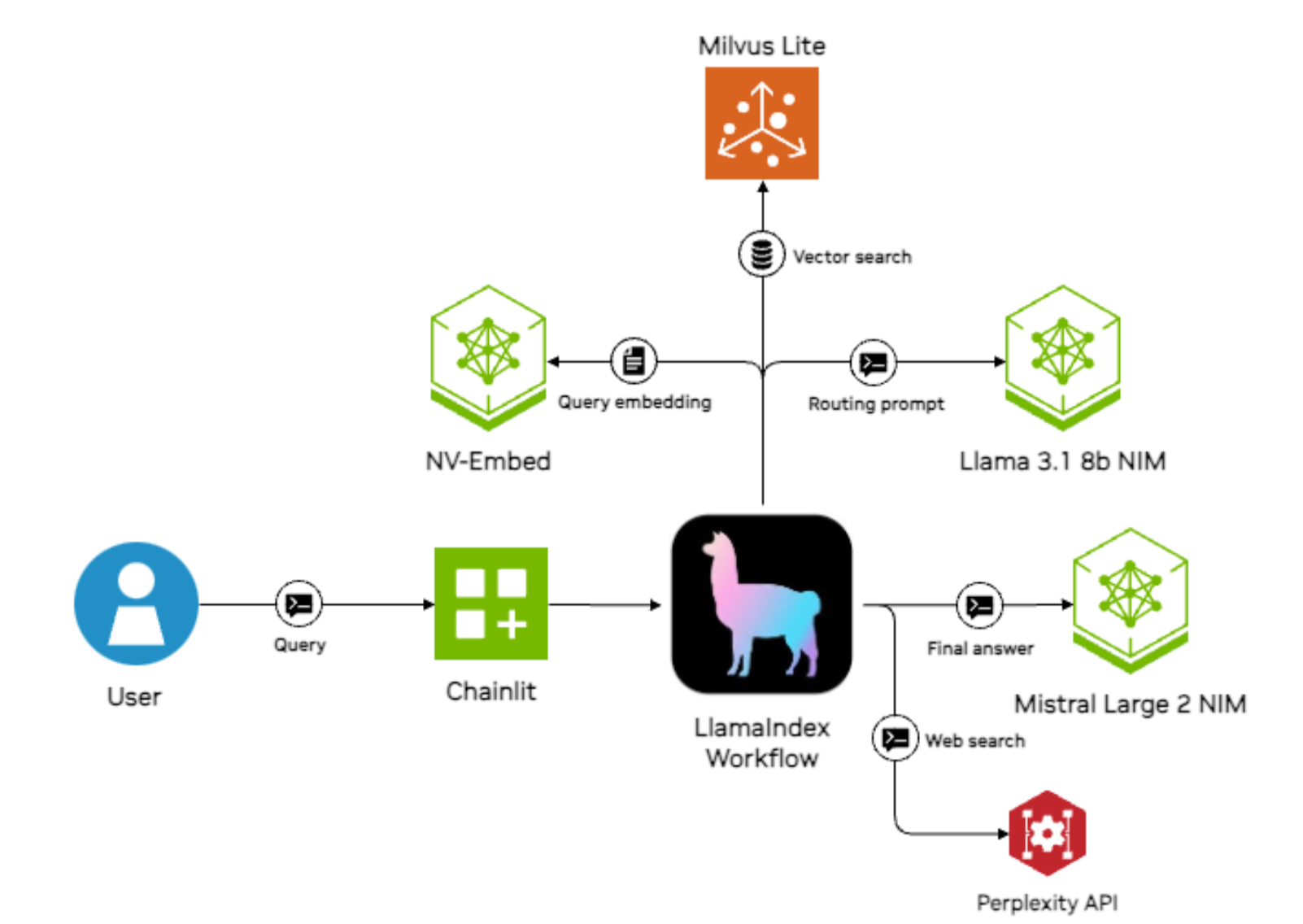

To address these challenges, NVIDIA built an AI sales assistant using LlamaIndex and NVIDIA NIM, combining the strengths of retrieval-augmented generation (RAG) with powerful AI models for enhanced performance. LlamaIndex provided the foundation for managing and querying internal knowledge, while NIM microservices offered scalable inference services for various large language models, ensuring low-latency responses.

The user interface was created with Chainlit, which provided an interactive chat environment that made querying the AI assistant intuitive for users.

Implementation Process

The implementation process for the AI sales assistant involved several key components:

● Pipeline Setup: The project began by designing a streamlined pipeline that would allow the system to handle multiple types of requests—both RAG and non-RAG queries. This ensured flexibility for the sales reps in the types of questions they could ask.

● Core Functionality with LlamaIndex: LlamaIndex Workflows was used to build the core retrieval and interaction capabilities. Workflows made it easy to extend the assistant's functionality and gave precise control over how queries were processed and answered.

● Enhanced Performance with NVIDIA NIM: NVIDIA NIM was integrated to handle inference tasks with advanced AI models like Llama 3.1 70b. NIM enabled seamless deployment and inference management, providing high availability and lower latency than typical enterprise APIs.

● User Interface with Chainlit: The Chainlit UI was used to create a chat-based interface where sales reps could interact with the AI assistant. The UI provided feedback on the processing steps, offering transparency on how each response was generated.
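As a rough illustration of the inference step described above, the sketch below builds a chat request for a NIM-hosted model. It assumes the NIM endpoint exposes an OpenAI-style chat completions route; the URL, the model identifier, and the helper names `build_chat_request` and `ask` are placeholders chosen for this example, not details taken from the project.

```python
import json
import urllib.request

# Assumed values for illustration only; substitute your own deployment's details.
NIM_URL = "http://localhost:8000/v1/chat/completions"  # hypothetical self-hosted endpoint
MODEL = "meta/llama-3.1-70b-instruct"  # assumed model identifier


def build_chat_request(question: str, context: str = "") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    messages = []
    if context:
        # For RAG queries, retrieved passages are passed in as a system message.
        messages.append(
            {"role": "system", "content": f"Answer using this context:\n{context}"}
        )
    messages.append({"role": "user", "content": question})
    payload = {
        "model": MODEL,
        "messages": messages,
        "temperature": 0.2,
        "max_tokens": 512,
    }
    return urllib.request.Request(
        NIM_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question: str, context: str = "") -> str:
    """Send the request and return the reply text (needs a reachable endpoint)."""
    with urllib.request.urlopen(build_chat_request(question, context), timeout=30) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Keeping request construction separate from the network call makes the payload easy to inspect and test without a running model server.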

Key Features

● Workflow Implementation: The system featured a robust Workflow for handling different types of requests, ranging from document retrieval to direct task execution. This allowed for versatile interactions, such as translating documents or answering in-depth product questions.

● Routing: Depending on the nature of the user’s question, the query was routed directly to a top-performing open LLM, Llama 3.1 405b, or to a multisource RAG pipeline using Llama 3.1 70b for faster and cheaper execution with large numbers of documents.

● Parallel retrieval: Users wanted RAG to draw on documents from multiple sources: our internal document repositories, the NVIDIA website, and the open internet. We combined dense retrieval, web search, and the Perplexity API to meet these requirements.

● Context augmentation: We have a large number of acronyms for business units, initiatives, and products. Using a Workflow made it much easier to design a modular system in which user queries or documents were augmented with information about acronyms, abbreviations, or other important names.
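The context augmentation and parallel retrieval features can be sketched in plain Python with `asyncio`. This is a simplified illustration, not the project's code: the glossary entries, the three stand-in retriever functions, and the name `retrieve_all` are hypothetical, and a real system would call a vector store, a site search, and a web search API where the stubs are.

```python
import asyncio
import re

# Hypothetical glossary; the real acronym list is internal and not given here.
GLOSSARY = {
    "NIM": "NVIDIA Inference Microservices",
    "RAG": "retrieval-augmented generation",
}


def expand_acronyms(query: str, glossary: dict[str, str] = GLOSSARY) -> str:
    """Append definitions for known acronyms that appear as whole words."""
    found = [a for a in glossary if re.search(rf"\b{re.escape(a)}\b", query)]
    if not found:
        return query
    notes = "; ".join(f"{a} = {glossary[a]}" for a in found)
    return f"{query} (Glossary: {notes})"


# Stand-in retrievers: each simulates I/O latency and returns labeled results.
async def search_internal_docs(query: str) -> list[str]:
    await asyncio.sleep(0.01)
    return [f"internal: {query}"]


async def search_company_site(query: str) -> list[str]:
    await asyncio.sleep(0.01)
    return [f"website: {query}"]


async def search_web(query: str) -> list[str]:
    await asyncio.sleep(0.01)
    return [f"web: {query}"]


async def retrieve_all(query: str) -> list[str]:
    """Augment the query, then query all sources concurrently and merge."""
    augmented = expand_acronyms(query)
    results = await asyncio.gather(
        search_internal_docs(augmented),
        search_company_site(augmented),
        search_web(augmented),
        return_exceptions=True,  # one failing source should not sink the rest
    )
    merged: list[str] = []
    for result in results:
        if isinstance(result, Exception):
            continue  # skip sources that raised
        merged.extend(result)
    return merged


if __name__ == "__main__":
    for doc in asyncio.run(retrieve_all("NIM pricing")):
        print(doc)
```

Because the three lookups run concurrently, total latency is roughly that of the slowest source rather than the sum of all three.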

Results and Benefits

The AI sales assistant project significantly improved query response times, enabling the sales team to quickly find relevant information. Combining NVIDIA NIM's optimized language models with LlamaIndex's structured retrieval delivers real-time inference. Furthermore, the intuitive Chainlit interface made it easy for users to interact with the AI without needing technical knowledge, boosting adoption and satisfaction among the sales reps.

Challenges Overcome

One of the major challenges was balancing performance with resource utilization. By leveraging NVIDIA NIM for managing inference workloads, the team ensured high performance without overwhelming infrastructure resources. Another challenge was ensuring that non-RAG tasks were appropriately routed to avoid unnecessary retrieval operations, which was effectively solved through custom workflows in LlamaIndex.
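To make the routing idea concrete, here is a minimal sketch of a router that sends direct tasks (translation, drafting) straight to the larger model and everything else to the RAG path. The article does not say how the routing decision was made; the keyword heuristic below is a stand-in chosen for simplicity, and a production system might use an LLM classifier instead. The cue list, model labels, and the `Route` type are all assumptions for illustration.

```python
from dataclasses import dataclass

# Assumed labels for the two paths described in the article.
DIRECT_MODEL = "llama-3.1-405b"
RAG_MODEL = "llama-3.1-70b"

# Hypothetical cues that suggest a task needing no document retrieval.
DIRECT_TASK_CUES = ("translate", "draft", "rewrite", "summarize this")


@dataclass
class Route:
    path: str   # "direct" or "rag"
    model: str  # model label to use on that path


def route_query(query: str) -> Route:
    """Pick a path with a simple keyword check (a stand-in for a real classifier)."""
    text = query.lower()
    if any(cue in text for cue in DIRECT_TASK_CUES):
        # No retrieval needed: skip the RAG pipeline entirely.
        return Route("direct", DIRECT_MODEL)
    return Route("rag", RAG_MODEL)


if __name__ == "__main__":
    print(route_query("Translate this email to German"))
    print(route_query("Which products support this feature?"))
```

Making the decision before any retrieval runs is what avoids the unnecessary retrieval operations mentioned above.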

Conclusion

The development of the AI sales assistant highlights the powerful capabilities of combining LlamaIndex Workflows and NVIDIA NIM to create efficient, scalable AI solutions. The project successfully addresses the need for an intelligent, versatile assistant that can handle a wide range of internal queries. If you're looking to build similar AI-powered solutions, leveraging these technologies can help you rapidly develop robust and user-friendly applications.